I dedicate this project to my favourite Mensa in Germany. I love you deeply with all my heart. Thank you for all the budget menus and frozen food recommendations. The food prices are so low, just like my body weight.

This post walks through the development of this project. For project details, please visit the MensaarLecker repository.

🍽 🥨 MensaarLecker – A beloved tool to find out Mensa Ladies’ favourite menu 🥨 🍽

Motivation

My friends and I ~~hate~~ love the UdS Mensa so much! The endless frozen food and French fries menus give us so much energy and motivation for our 5-hour afternoon coding marathons. However, no one actually knows how many potatoes they have exterminated over the week. There is already a genius webpage created by some Schnitzel lover. Personally, I like its minimalistic layout and its determination in the search for Schnitzel.

However, we want more.

It’s not just Schnitzel; we want to know everything about their menu. We want to know what’s inside the mensa ladies’ brains when they design next week’s menu.

The desire never ends. We need more data, more details, more, More, MORE!

Description

MensaarLecker is a statistics tool that counts how often each menu item appears and provides daily menu information. It contains:

- A web scraper that collects the daily menu of the Mensa at Saarland University.

- Statistics that track each item’s (main dish and side dish) occurrence count and percentage.
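
As a rough illustration of the statistics part, the sketch below counts how often each item appears across a few days and turns the counts into percentages. The sample menus and the function name `occurrence_stats` are made up for this example, and the percentage is assumed to mean the share of recorded days on which an item was served; the project may define it differently.

```python
from collections import Counter

def occurrence_stats(daily_menus):
    """Return {item: (count, percent_of_days)} for a list of daily menus.

    Assumption: "percentage" means the share of recorded days on which
    the item appeared at least once.
    """
    days = len(daily_menus)
    counts = Counter()
    for menu in daily_menus:
        # set() so an item listed twice on one day counts once
        counts.update(set(menu))
    return {
        item: (n, round(100 * n / days, 1))
        for item, n in counts.items()
    }

# Hypothetical sample data: one list of side dishes per day
sample = [
    ["Pommes Frites", "Karottensalat"],
    ["Pommes Frites", "Reis"],
    ["Pommes Frites", "Brötchen"],
    ["Reis", "Brötchen"],
]
stats = occurrence_stats(sample)
print(stats["Pommes Frites"])  # (3, 75.0)
```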

Development Process

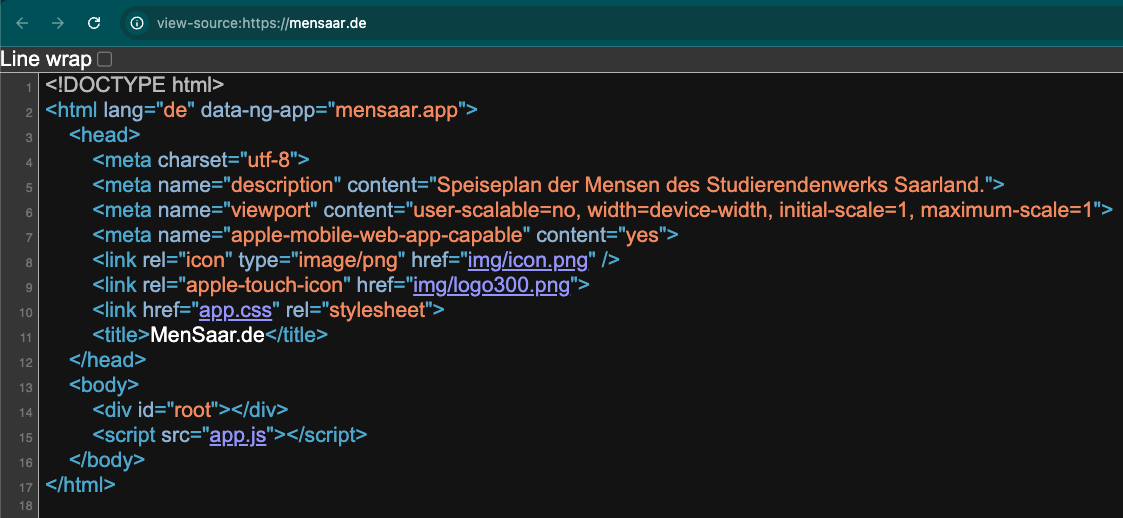

Beautiful Soup

I started my journey with Beautiful Soup, one of the most popular Python web scraping packages. However, as befits a university that is well known for its computer science program, all the menus are rendered using JavaScript, and Beautiful Soup only parses the static HTML or XML it is given. So the scraper sees nothing but an empty skeleton page.
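
To see why a static parser comes back empty-handed, here is a small sketch. The HTML string is a made-up stand-in for what a JavaScript-rendered page typically serves before any script runs (it is not the real mensaar.de response), and the standard library's `html.parser` is used in place of Beautiful Soup so the example has no dependencies. The class name `meal-title` is the one the rendered page uses for main dishes.

```python
from html.parser import HTMLParser

# Hypothetical skeleton, similar to what a single-page app serves
# before JavaScript fills in the content.
SKELETON = """
<html>
  <head><script src="app.js"></script></head>
  <body><div id="app"></div></body>
</html>
"""

class ClassCounter(HTMLParser):
    """Count start tags whose class attribute contains a given class."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = wanted
        self.hits = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None
        classes = (dict(attrs).get("class") or "").split()
        if self.wanted in classes:
            self.hits += 1

def count_class(html, wanted):
    parser = ClassCounter(wanted)
    parser.feed(html)
    return parser.hits

print(count_class(SKELETON, "meal-title"))  # 0
rendered = '<span class="meal-title">Flammkuchen</span>'
print(count_class(rendered, "meal-title"))  # 1
```

The parser itself works fine; the static skeleton simply contains no menu markup to find.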

Selenium

Basically, Selenium drives a real browser through WebDriver, much like a human user would. From there we can scrape the rendered page. Things get simpler once the scraper sees the website the way we see it in the browser: we just need to find the tags that contain the information we need and save their contents.
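
One practical detail is that rendering takes a moment, so asking for elements right after loading the page can return nothing. A minimal sketch of waiting for the rendered content is shown below. The function name `load_rendered_counters`, the 15-second timeout, and the choice of headless Firefox are assumptions made for illustration, not necessarily what the project does.

```python
def load_rendered_counters(url="https://mensaar.de/#/menu/sb", timeout=15):
    """Open the page in headless Firefox and return the text of each counter.

    Selenium is imported inside the function so that merely loading this
    file does not require Selenium or a browser to be installed.
    """
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.FirefoxOptions()
    options.add_argument("-headless")  # no visible browser window
    driver = webdriver.Firefox(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has rendered at least one counter,
        # or raise TimeoutException after `timeout` seconds.
        counters = WebDriverWait(driver, timeout).until(
            EC.presence_of_all_elements_located((By.CLASS_NAME, "counter"))
        )
        # Read the text before quitting; elements are unusable afterwards.
        return [counter.text for counter in counters]
    finally:
        driver.quit()  # always close the browser, even on errors
```

Calling `load_rendered_counters()` needs Firefox and geckodriver available on the machine; it returns one block of text per counter.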

Scraping

| Data we need | Tag |
|---|---|
| menus | `<div class="counter">` |
| date | `<div class="cursor-pointer active list-group-item">` |
| main dish | `<span class="meal-title">` |
| side dish | `<div class="component">` |

The first part of the task is to get the daily menu. We also scrape the date from the website to make the later steps easier.

Using Selenium’s find_element and find_elements methods, we can create a simple scraper like this:

from selenium import webdriver
from selenium.webdriver.common.by import By

# Open the menu page in a real browser so JavaScript can render it
driver = webdriver.Firefox()
driver.get("https://mensaar.de/#/menu/sb")

# Each counter holds one or more meals
counters = driver.find_elements(By.CLASS_NAME, "counter")
for counter in counters:
    for title in counter.find_elements(By.CLASS_NAME, "meal-title"):
        meal_title = title.text.strip()

However, the webpage also has a counter called Wahlessen, which offers a pricier and less predictable menu, and we want to exclude its data:

for counter in counters:
    counter_title = counter.find_element(By.CLASS_NAME, "counter-title").text.strip()
    # Filter for specified counter titles; this skips Wahlessen
    if counter_title in ["Menü 1", "Menü 2", "Mensacafé"]:
        for title in counter.find_elements(By.CLASS_NAME, "meal-title"):
            meal_title = title.text.strip()
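
Putting the tag table and the counter filter together, a fuller scrape might look like the sketch below. It is only an illustration: `collect_menu`, `texts`, and the shape of the returned dictionary are made up here, and it assumes side dishes are read per counter rather than per meal and that only one date entry is active at a time. The locator strategies are written as plain strings (the values behind Selenium's `By.CLASS_NAME` and `By.CSS_SELECTOR`) so the function can be exercised without a browser.

```python
# Same values as selenium's By.CLASS_NAME and By.CSS_SELECTOR
CLASS_NAME = "class name"
CSS_SELECTOR = "css selector"

# Counters to keep; everything else (e.g. Wahlessen) is skipped
WANTED = {"Menü 1", "Menü 2", "Mensacafé"}

def texts(parent, class_name):
    """Stripped text of every descendant of `parent` with that class."""
    return [el.text.strip() for el in parent.find_elements(CLASS_NAME, class_name)]

def collect_menu(driver):
    """Build {"date": ..., "counters": {title: {...}}} from a rendered page.

    `driver` is an already opened Selenium WebDriver (or any object with
    the same find_element / find_elements methods).
    """
    # The date element carries several classes, and a class-name locator
    # takes exactly one, so a CSS selector is used instead.
    date = driver.find_element(CSS_SELECTOR, ".active.list-group-item").text.strip()
    menu = {"date": date, "counters": {}}
    for counter in driver.find_elements(CLASS_NAME, "counter"):
        title = counter.find_element(CLASS_NAME, "counter-title").text.strip()
        if title not in WANTED:
            continue
        menu["counters"][title] = {
            "meals": texts(counter, "meal-title"),
            "components": texts(counter, "component"),
        }
    return menu
```

With a real driver this would be called as `collect_menu(driver)` once the page has finished rendering.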

Storage

It is kinda pointless to set up a full database for such a small amount of data (I may take my words back in the future), so we just store the collected data in JSON files.

So every day gets its own JSON file. A separate JSON file holds the statistics: each day’s data is simply added to the counts already in that file, so the totals accumulate over time.

import json

# Save today's menu to its own file
with open(output_file, "w", encoding="utf-8") as f:
    json.dump(result, f, ensure_ascii=False, indent=2)
print(f"Results saved to {output_file}")

# Save the updated occurrence counts to the JSON file
count_result = {
    "meal_counts": dict(meal_count),
    "component_counts": dict(component_count)
}
with open(count_file, "w", encoding="utf-8") as f:
    json.dump(count_result, f, ensure_ascii=False, indent=2)
print(f"Counts saved to {count_file}")

The statistics file then looks like this:

{
  "meal_counts": {
    "KlimaTeller: Broccoli und Cheese Nuggets mit Chili-Kräuterdip": 1,
    "Hähnchenschnitzel „Wiener Art“ mit Paprikarahmsoße": 1,
    "Flammkuchen mit Tomaten und Rucola": 1,
    "Köttbullar und Rahmsoße mit Champignons": 1,
    "KlimaTeller: Türkische Pizza Lahmacun": 1,
    "KlimaTeller: Hausgemachte vegetarische Kartoffelsuppe": 1,
    "Pasta mit Argentinischer Hackfleischsoße": 1
  },
  "component_counts": {
    "Pommes Frites": 1,
    "Karottensalat": 2,
    "Reis (aus biologischem Anbau)": 2,
    "Weiße Salatsoße Dressing": 6,
    "Obst (Apfel aus biologischem Anbau)": 6,
    "Brötchen": 4,
    "Wiener Würstchen": 1
  }
}
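
The accumulation step itself (load the existing totals, add today's items, write the file back) could be sketched as follows. The function name `update_counts` and the temporary demo file are assumptions for illustration; only the `meal_counts` and `component_counts` keys come from the project's file format.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path

def update_counts(count_file, meals, components):
    """Add today's items to the running totals stored in `count_file`."""
    path = Path(count_file)
    if path.exists():
        saved = json.loads(path.read_text(encoding="utf-8"))
    else:
        saved = {}  # first run: start from empty totals
    meal_count = Counter(saved.get("meal_counts", {}))
    component_count = Counter(saved.get("component_counts", {}))
    meal_count.update(meals)            # +1 per listed meal
    component_count.update(components)  # +1 per listed side dish
    count_result = {
        "meal_counts": dict(meal_count),
        "component_counts": dict(component_count),
    }
    path.write_text(
        json.dumps(count_result, ensure_ascii=False, indent=2),
        encoding="utf-8",
    )
    return count_result

# Demo: two "days" written to a throwaway file
with tempfile.TemporaryDirectory() as tmp:
    demo_file = Path(tmp) / "count.json"
    update_counts(demo_file, ["Flammkuchen"], ["Pommes Frites", "Brötchen"])
    totals = update_counts(demo_file, ["Flammkuchen"], ["Pommes Frites"])
print(totals["component_counts"])  # {'Pommes Frites': 2, 'Brötchen': 1}
```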

Automation

The part that gives me a headache…

I tried to use a GitHub Actions workflow to run the Python script daily, but it didn’t work out… So as a next step, I may set up the automation locally and connect the folder to the repository through GitHub Desktop.

Another solution may be to move the JSON storage into a standard database such as MongoDB.
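
Whichever scheduler ends up running the script, one thing worth guarding against is a second run on the same day, since the statistics are built by adding each day's data to the running totals. A minimal sketch of such a guard is shown below, assuming the per-day files are named after the ISO date; the file naming and both function names are hypothetical.

```python
import tempfile
from datetime import date
from pathlib import Path

def daily_file(data_dir, day):
    """Path of the per-day JSON file, e.g. <data_dir>/2025-01-31.json."""
    return Path(data_dir) / f"{day.isoformat()}.json"

def should_scrape(data_dir, day):
    """True only if `day` has not been scraped and saved yet."""
    return not daily_file(data_dir, day).exists()

# Demo in a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    today = date(2025, 1, 31)  # fixed date so the demo is repeatable
    first = should_scrape(tmp, today)
    daily_file(tmp, today).write_text("{}", encoding="utf-8")
    second = should_scrape(tmp, today)
print(first, second)  # True False
```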

Please cite the source when reprinting. Feel free to verify the sources cited in the article and to point out any errors or unclear passages, either in the comments section below or by email to GreenMeeple@yahoo.com